This is the third and final article in this series. Part 1 and part 2 describe how the concept of Godel numbers were first used to solidify the foundations of computing, then subsequently neglected by mainstream computing as it evolved until research at Hewlett Packard showed how the concept could lead to the caching of pure computation. In this final part I want to show the implications of this discovery for the future of IT.

The WWW has shown that resources on the network could be cached to reduce latency and bandwidth requirements. The web has a simplistic, idealised model of caching semantics because the rules must be transmitted with the data. For example it uses rules like: “you can assume this resource will remain the same for 1 day but after that you must check back with the server to see if it changed.”

Within a resource oriented system running within one server the rules can become tighter. Being tighter means that you can avoid unnecessary re-requesting but also know instantaneously when change occurs. The later is particularly important to ensure data integrity.

There are two aspects to the tightening of caching semantics in ROC. Firstly any resource can provide a software function that is used to determine when a resource representation is no longer valid. A good example of this is detecting when a resource from a filesystem changes by comparing the files’ timestamp against when it was read.

The second aspect becomes important when we have resources that are the result of computation on other resources - for example, if a resource is defined as the word count of a document. These computed resources validity are dependent upon their arguments remaining unchanged. So, in this example, the word count of a document must be recomputed if the document changes.

Dependencies can run deep. A page on a social networking site might composite data from hundreds of sources. Various fragments of the page may update on different timescales, some may be common across many users of the site. By hierarchically decomposing the page into resources, each part need only be recomputed when necessary to form the delivered page. This caching speeds up page delivery time and reduces load on the server.

Caching of fragments of a page is an example of how resource oriented computing can cache computation anywhere within a process. This opportunistic caching doesn’t need to be configured, anything can be stored. However it can only be retrieved whilst a response remains a valid representation of the current state of a resource.

Available memory within a server is used to store as many representations as possible. If available memory becomes filled then the cache can employ a process to free up space - this is known as culling.

Determining what to cull is based on an assessment of how valuable a particular representation is. This value is calculated based on if a representation is being re-requested and if so how frequently. Additionally, how much computing time went into evaluating each request can be valued. Using a combination of how often a representation is reused along with how much effort it took to create gives a good metric for what to keep and what to cull to free up space for new computations.

At first glance it might look like without sufficient memory to store all the potentially reusable computations a system’s benefit from caching would drop off markedly - systems working on datasets that far exceed the memory of cost effective hardware would show relatively poor performance.

However it has been shown time and time again that real world problems have far from uniform distributions of requests. What this means is that requests tend to be focused around hotspots of activity whilst large parts of a problem domain remain relatively untouched, at least over the short-term. Over the longer term different or new parts of the problem domain may emerge.

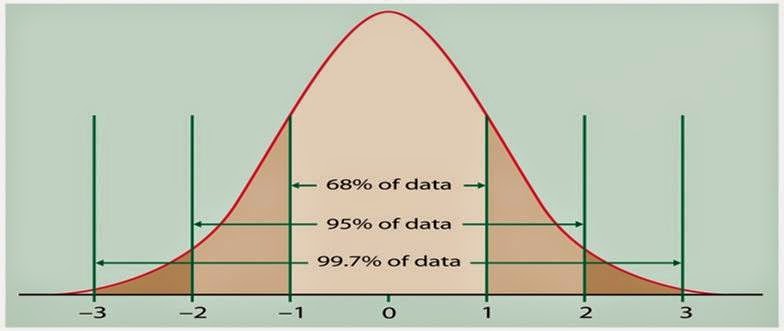

The non-uniformity of the request distribution is driven by two common phenomenon - normal distributions(1) and power-law distributions(2). A normal distribution is a distribution of data where elements of that data are centred around the mean point. Most of the data points are distributed close to the mean and less and less data points are found further away. Normal distributions are very common in natural systems.

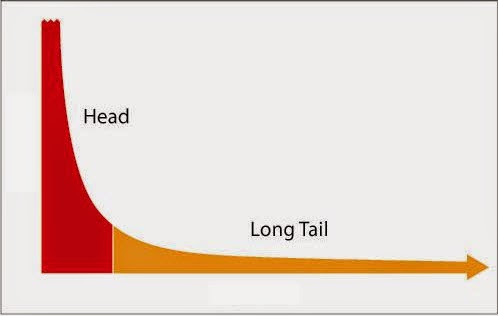

Power law distributions are more common in economic and artificial data sets. In a power law distribution you see the bulk of data points squeezed to one end of the distribution with the probability of finding data points further along the line falling off exponentially.

They team now had a practical computation cache that could perform well in the real world. This exciting result surprised the 1060 team. They had set out to change the development and maintenance economics of software, but had serendipitously also improved the runtime economics too.

Minimizing our Environmental Footprint

Green computing has a history dating back to the early 1990s with the Energy Star initiative. Now, with much of the computing power focused in large data centres these have been low hanging fruit for optimization.

Most data centres have now been designed to avoid the need for air conditioning.

Virtualisation technology on servers now allows several low intensity systems to share hardware resources.

Across the board approaches to reducing hardware power usage using low powered idle and energy saving modes helps not just in data centres but on our desktop and handheld devices too.

Deep below the surface there have been advances in optimising compilers and handcrafted code and/or hardware to solve specific high demand problems such as video encoding.

But today the low hanging fruit has been picked: the bulk of data centres are running bespoke systems where performance is a low priority after achieving correct functioning and minimising development cost. This is because most business problems can be scaled up by throwing more hardware at the problem and unless the solution must be massively scaled the costs of development outweigh hosting costs.

Resource Oriented computing changes the game. Not only does it make it quicker and cheaper to develop and evolve systems, it makes them naturally more compute efficient too.

As we move into the 21st century we are becoming more aware of the environmental impact of our actions. Simultaneously our dependence and usage of information processing systems is growing exponentially.

Already IT is consuming more power than the global aviation industry. Unlike other industries that are seeing incremental reductions in power consumption, computing is see incremental rises because despite performance/watt improvements increased demand is outstripping any benefits.

The resource oriented approach to software can lead to a step reduction in the region of 10x reduced computation(3) that can lead to near proportional reductions in power consumption.

1. http://en.wikipedia.org/wiki/Normal_distribution

2. http://en.wikipedia.org/wiki/Power_law

3. http://durablescope.blogspot.co.uk/2014/03/reducing-power-consumption-with-roc.html