I seem to find myself with a lot of unproductive time: time when I can’t work on real problems, the kind that need tools such as a coding environment, a terminal, internet search, and somewhere to capture notes. Walking the dog, driving, and getting simple chores done are all examples. Times like these, though, are good for thinking, and recently I’ve been thinking a lot about the concept of technical debt.

It was a great insight by Ward Cunningham to draw an analogy between financial debt and the trade-offs in software development. Usually the trade-offs are between short-term and long-term solutions. When a project implements a quick fix, or puts off investing in a long-term solution, it is borrowing from the future: if the project is to continue, that work must be done at some stage, otherwise it becomes harder and harder to make progress. Short-term solutions usually involve compromises that leave behind a mess. This mess must be worked around, and unless it is cleaned up, eventually you can’t manoeuvre without breaking something or falling over. This extra incremental effort is like servicing the loan. It is, however, an interest-only repayment, as eventually you need to repay the principal by implementing the long-term solution.
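The interest-only nature of the repayment can be made concrete with a toy model. This is a hypothetical illustration, not a measurement: the function name `delivered_per_iteration`, the fixed capacity per iteration and the flat interest rate are all assumptions made for the example. A shortcut in the first iteration delivers extra work, every later iteration loses a fraction of the outstanding principal to workarounds, and the principal is only cleared when the long-term solution is built.

```python
# A toy model of technical debt as an interest-only loan.
# Every number and name here is hypothetical; it only illustrates the analogy.

def delivered_per_iteration(iterations, capacity, principal, interest_rate, repay_at=None):
    """Useful work delivered in each iteration of a project.

    capacity      -- effort available per iteration
    principal     -- effort "borrowed" by taking a shortcut in iteration 0
    interest_rate -- fraction of the outstanding principal spent each
                     iteration working around the resulting mess
    repay_at      -- iteration in which the long-term fix is built, or None
    """
    debt = 0.0
    delivered = []
    for i in range(iterations):
        # Servicing the loan: workarounds cost effort while the debt is outstanding.
        effort = capacity - debt * interest_rate
        if i == 0:
            # Borrowing: the shortcut delivers extra work up front.
            effort += principal
            debt = principal
        if i == repay_at:
            # Repaying the principal: the long-term solution costs what was saved.
            effort -= debt
            debt = 0.0
        delivered.append(effort)
    return delivered


never = delivered_per_iteration(10, capacity=10, principal=5, interest_rate=0.2)
early = delivered_per_iteration(10, capacity=10, principal=5, interest_rate=0.2, repay_at=1)
print(sum(never), sum(early))
```

With these made-up numbers, never repaying delivers 96 units over ten iterations and still leaves the 5-unit principal owing, while repaying in the second iteration delivers 99. Without the shortcut the project would have delivered 100, so the longer the principal stays outstanding, the more interest the project pays.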

There are, however, some legitimate reasons for getting into technical debt. A good example is developing a proof of concept to de-risk something. There is no point working hard to achieve perfection; after all, we will likely throw it all away once we’ve understood the problem. It’s a little like trying to find our way out of a muddy maze. It’s not a good plan to pave the paths to make our running around a little easier, as most of them will turn out to be dead ends and our return on investment will be low. So having some insight into, or prediction of, the future helps in making the right trade-off.

Is it worth building a paved path through here?

I’m really surprised that the concept of technical debt has not gained wider adoption beyond the field of software engineering. There are so many examples in everyday life.

One example is when the effort on multiple problems can be combined because they overlap somewhat. A perpetual domestic issue I have is loading the dishwasher. Eventually all the dirty items must go in; that’s a given. Leaving piles of crockery filling the kitchen builds a debt mountain. However, if you put them in one by one, that’s a lot of bending down and opening and closing the door. In addition, we will likely need to move existing items around, as we can’t globally optimise the layout if we don’t know what might be added later. Leaving the job of loading the dishwasher till later actually makes the process more efficient. If we couldn’t get into a little debt here by letting things stack up, we’d be worse off. It’s like getting a loan to invest in productivity measures.
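The dishwasher trade-off is essentially about amortising a fixed overhead. A minimal cost model, assuming (hypothetically) that every loading session has a fixed cost, every item has a placement cost, and every session after the first costs extra rearranging, might look like this; `loading_cost` and all the numbers are illustrative assumptions.

```python
# Comparing "load each item as it arrives" with "let items stack up, then load".
# Costs are hypothetical units; only their relative sizes matter.

def loading_cost(items, batch_size, session_overhead, per_item, rearrange_per_session):
    """Total effort to load `items` dirty items, `batch_size` at a time.

    session_overhead      -- fixed cost of each session (bending down, opening
                             and closing the door)
    per_item              -- cost of placing one item
    rearrange_per_session -- cost, in every session after the first, of moving
                             existing items because the final layout was unknown
    """
    sessions = (items + batch_size - 1) // batch_size  # ceiling division
    return (sessions * session_overhead
            + items * per_item
            + max(sessions - 1, 0) * rearrange_per_session)


for batch in (1, 6, 24):
    print(f"batches of {batch}: cost {loading_cost(24, batch, 5, 1, 2)}")
```

With 24 items, loading one at a time costs 190 units, four batches of 6 cost 50, and loading everything at once costs 29. Letting the pile build up is the "loan" that pays for itself.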

Why is the washing up not done?

At this level the analogy works quite well, but when you think more deeply about the mechanism it’s not so straightforward. Who is the party offering the loan? Does the risk of defaulting, when the outcome is never needed, play any part? Does a project start in debt? Is it possible to build up a positive balance that can offset the future need for loans? I don’t think it is.

I think a more accurate, if less accessible, analogy is entropy. Entropy can be a confusing subject, but it has deep ties to fundamental physics and statistical mathematics. In fact, it is the main concept physics offers for understanding the forward flow of time: the fundamental laws of motion work essentially the same whether time runs forwards or backwards! A simple way to think of entropy is as the amount of disorder a system has. We intuitively know how much easier it is to mess things up than to reverse the process and make things tidy and ordered.

The concept of entropy was first explored in relation to engines that convert heat into work, during the Victorian era. At first it was thought that heat energy could be converted to work without loss. Practical improvements slowed, and theoretical work by Carnot showed that there is a fundamental limit to how efficient such an engine can be. Entropy was developed as a measure of how useful a given amount of energy is. Work carries energy out of a system but carries no entropy, so the existing entropy becomes more concentrated in the energy that remains, which makes it harder to extract more work. Energy is always conserved, but it becomes less and less usable over time. In an isolated system entropy can only increase, and it increases through processes such as friction and dissipation. These tend to leave a system with all its energy in the form of uniformly distributed heat.

To overcome this one-way descent into uselessness, engines need a constant supply of new, low-entropy energy from a fuel. They also need to have the excess entropy removed, usually by cooling. Cooling transfers that entropy, along with heat, out of the system and into the environment.
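The link between cooling and Carnot's limit can be written down with the standard textbook bookkeeping for an idealised engine running in a cycle between a hot reservoir at absolute temperature $T_H$ and a cold one at $T_C$. Heat $Q_H$ drawn from the hot side brings entropy $Q_H/T_H$ with it; the work $W$ carries none, so all of that entropy has to leave with the heat $Q_C$ rejected to the cold side.

$$
\begin{aligned}
\frac{Q_C}{T_C} &\ge \frac{Q_H}{T_H} && \text{(entropy rejected is at least the entropy taken in)} \\
W &= Q_H - Q_C && \text{(energy is conserved)} \\
\eta = \frac{W}{Q_H} &\le 1 - \frac{T_C}{T_H} && \text{(Carnot's limit)}
\end{aligned}
$$

For example, an ideal engine running between 500 K and 300 K can convert at most 40% of the heat it takes in into work; the rest must be rejected as heat to carry the entropy away.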

You can view the systems of work that we as humans engage in as engines. Software projects are engines that take human intellectual energy and convert it into code deliverables. They consist of both physical and mental processes that require an input of energy and, hopefully, output useful artefacts. The amount of useful work they produce is limited by entropy. This is provably true at the physical level of our bodies and the machines we use, but it also appears to be true at the higher level of our information processes and structures. Why would this be true?

Again, the mechanism has two parts: first, the ability to extract work from the system and, second, the work needed internally to keep the system organised and ready for further work. In a low-entropy, well-organised system it is possible to extract work immediately. However, extracting that work creates further entropy as well as concentrating the entropy already present. Without further work to deal with this disorder, our projects get cluttered with mess that slows our progress. We need to invest additional energy to organise and take out the garbage.

I see two factors behind the slowdown that entropy causes. Firstly, we have limited working space for our projects, and the more of that space clutter takes up, the less we have for work. Secondly, when the signal-to-noise ratio of the information resources within a project is low, we spend a disproportionate amount of time dealing with the noise. Let us look at these two factors in a bit more detail.

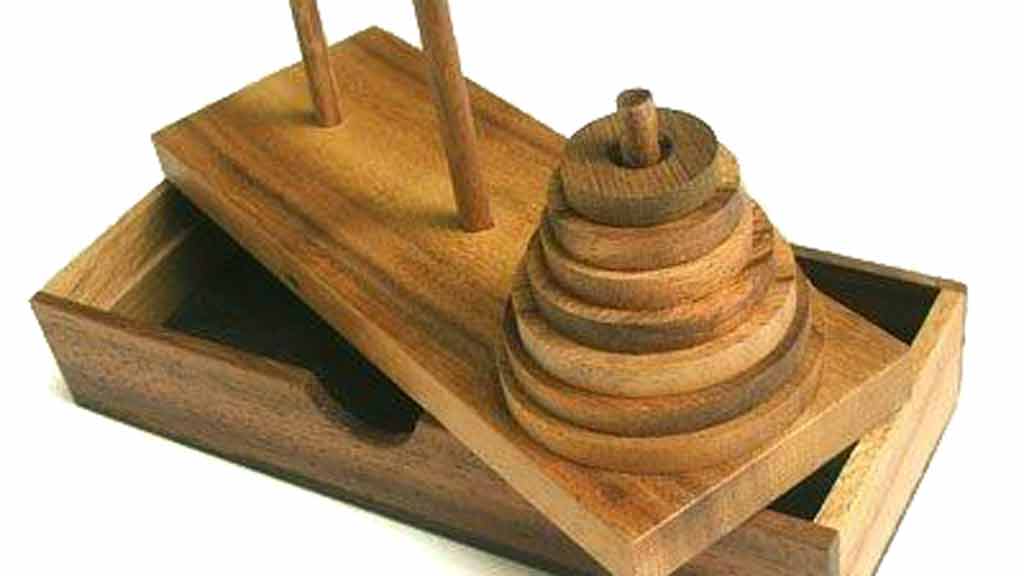

The Tower of Hanoi puzzle shows how much work is required to organise wooden disks when space is constrained. It is a very simple puzzle: transfer a stack of disks from one peg to another, moving one disk at a time and keeping the disks ordered, so that no disk is ever placed on a smaller one. The number of moves needed to solve the puzzle drops dramatically as the working space, represented by intermediate pegs, increases. When clutter increases in a project we have less space, and this slowdown shows up in any problem where space is constrained. However, even when we can create more space, a second mechanism limits our productivity…
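To see how much extra working space helps, the sketch below counts the fewest known moves for different numbers of pegs. For three pegs it uses the classic result of 2^n - 1 moves; for more pegs it uses the Frame-Stewart recursion, a technique not mentioned above, swapped in here because it is the standard best-known strategy for extra pegs. The function name `hanoi_moves` is illustrative.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def hanoi_moves(disks, pegs):
    """Fewest known moves to transfer `disks` disks from one peg to another.

    Three pegs: the classic recursion, exactly 2**disks - 1 moves.
    More pegs: the Frame-Stewart recursion. Park the top k disks on a spare
    peg using all pegs, move the remaining disks using one fewer peg (the
    parked stack occupies one), then bring the k parked disks back on top.
    """
    if disks == 0:
        return 0
    if disks == 1:
        return 1
    if pegs < 3:
        raise ValueError("at least three pegs are needed to move more than one disk")
    if pegs == 3:
        return 2 ** disks - 1
    # Try every split point k and keep the cheapest.
    return min(
        2 * hanoi_moves(k, pegs) + hanoi_moves(disks - k, pegs - 1)
        for k in range(1, disks)
    )


for pegs in (3, 4, 5):
    print(f"{pegs} pegs: {hanoi_moves(10, pegs)} moves for 10 disks")
```

For ten disks this gives 1,023 moves with three pegs, 49 with four and 31 with five: a little more working space turns a long slog into a short job.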

The Towers of Hanoi will keep you busy

Computer scientists classify processes and algorithms by their time complexity. Some simple processes take a number of steps proportional to the size of the problem, for example searching a list for a specific item. On average you will find the item halfway through the list, so searching a list twice as long will, on average, take twice the time. The Tower of Hanoi puzzle we looked at above has a quite different time complexity: every additional disk roughly doubles the number of moves needed to complete it (with three pegs, n disks take 2^n - 1 moves). If we need to juggle needless clutter in addition to valuable information, we can see how it’s going to take longer, sometimes much, much longer.
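The contrast between the two growth rates is easy to check by counting. This is a small sketch with illustrative function names: a linear search that counts the items it inspects, averaged over every possible target, alongside the move count of the classic recursive three-peg Hanoi solution.

```python
def inspections_to_find(items, target):
    """Linear search that returns how many items it inspected."""
    for count, item in enumerate(items, start=1):
        if item == target:
            return count
    return len(items)  # target absent: every item was inspected


def average_search_cost(n):
    """Average inspections over every possible target in an n-item list."""
    items = list(range(n))
    return sum(inspections_to_find(items, t) for t in items) / n


def hanoi_move_count(disks):
    """Moves made by the classic recursive three-peg solution."""
    if disks == 0:
        return 0
    # Shift the top disks-1 out of the way, move the largest disk, then shift
    # the disks-1 back on top: each extra disk doubles the work, plus one move.
    return 2 * hanoi_move_count(disks - 1) + 1


for size in (4, 8, 16):
    print(f"size {size}: search {average_search_cost(size)}, hanoi {hanoi_move_count(size)}")
```

Doubling the list from 8 to 16 items takes the average search from 4.5 to 8.5 inspections, while doubling the disks from 8 to 16 takes the puzzle from 255 to 65,535 moves.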