I’m not really sure why it’s taken so long but I’d like to introduce Polestar. I actually first started the project back in 2014. I got a couple of early adopters in January 2015. Since then it’s been used quietly without much fuss with a few updates along the way.

Background

I got the idea for Polestar at the 2014 Good Things conference in Bath, UK. I noticed a repeating pattern from the speakers - a kind of reference Internet of Things architecture. This architecture had 4 levels:

- sensors - capturing information but usually fairly dumb because there would be many and they would be cheap.

- hub - to capture data from sensors, upload to cloud and provide local autonomous feedback to control actuators along with other devices.

- cloud services - which receive data from hubs, store historical data and perform analytics.

- clients - apps and other user interfaces to present visualisations and control surfaces to users.

I made the connection between this architecture and the work I’d been doing with my home monitor project. Actually there was a disconnect. These reference architectures made the cloud services the predominant part. There are reasons for this:

- Vendors want your data. The monetisation of IoT is still embryonic and nobody wants to miss what may be the only revenue in the whole thing.

- It is hard to provide rich services from a low cost hub. After all it isn’t possible to store large amounts of data and have a large compute capacity at the price point needed.

- The hub might be behind a firewall or NAT router making it not directly accessible when outside the immediate network without specialist setup.

My home monitor project kind of merged the hub and cloud services concepts. There are a few good reasons for this too:

- Connection to the cloud is not guaranteed. It would be bad for critical control loops to pause if the connection was lost, for example if heating control was lost in a residential property.

- Privacy is becoming more of an issue as awareness rises. The biggest protest I heard when Nest was acquired by Google was “oh great, now Google will know when I’m awake.”

Of course cloud services do have their place and I feel the trend will be towards cross-vendor integration products such as Comb9 and IFTTT.

Motivation

My original home monitor was hand crafted and specific to the sensors and actuators that I had. It had a fixed database schema for storing data and fixed code for obtaining the sensor values. It did have the concept of scripts that could be edited through the UI. These scripts could be used to generate visualisations of the data. They could also be run at specific periods to implement custom actions on actuators that were coded into the application. An example of this was a script that turned my heating on and off depending on an algorithm that used who was at home, time of day and temperatures at various locations.

Being hand crafted, it was a burden to experiment with novel technologies or automation ideas. Basically most things involved coding in an IDE and then uploading the new code to the server - which was and still is an old laptop in the loft.

What I wanted was an abstraction that was generic, that had nothing specific to my particular usage and that could be configured to work for anybody or any situation that required it. Beyond that, I wanted the customisation to be possible remotely through a web interface.

Idea

The first problem to solve was data persistence. By using a NoSQL database I was able to easily store the core time series data of the abstraction, with new values added and taken away at any time. I chose MongoDB. MongoDB could also store custom collections dynamically if needed.
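
As a rough illustration of why a schema-less store suits this, here is a minimal sketch in Python that uses plain dictionaries in place of MongoDB documents. The record layout, the sensor names and the functions `append_sample` and `history` are all assumptions made for the example; they are not Polestar's actual schema or API.

```python
from typing import Any

# Each record is a schema-less document: a timestamp plus whatever
# sensor values happened to exist at that moment (hypothetical layout).
Record = dict[str, Any]


def append_sample(store: list[Record], timestamp: int, values: dict[str, Any]) -> None:
    """Append one sample; no fixed set of sensor names is required."""
    store.append({"t": timestamp, "values": dict(values)})


def history(store: list[Record], sensor: str, start: int, end: int) -> list[tuple[int, Any]]:
    """Return (timestamp, value) pairs for one sensor within [start, end]."""
    return [
        (rec["t"], rec["values"][sensor])
        for rec in store
        if start <= rec["t"] <= end and sensor in rec["values"]
    ]


store: list[Record] = []
append_sample(store, 1000, {"lounge.temp": 19.5})
# A new sensor appears later without any schema migration.
append_sample(store, 1060, {"lounge.temp": 19.7, "front.door": True})
# The first sensor is taken away; its older samples are still queryable.
append_sample(store, 1120, {"front.door": False})
```

Querying `history(store, "lounge.temp", 0, 2000)` returns only the two samples that contain that sensor, which is the property a fixed relational schema made awkward.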

I extended the concept of scripts to make them event driven. Events could be sent periodically or when sensors changed value. In addition scripts could easily call each other for modularity. Scripts have access to both real-time and historical sensor values, and they can store state between invocations.
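
The event-driven model can be sketched roughly as follows. This is Python rather than the Groovy that Polestar scripts really use, and the `Hub` class, its methods, the event name `timer.1m` and the two example scripts are all hypothetical; the sketch only shows triggers, scripts calling each other, and state kept between invocations.

```python
from collections import defaultdict
from typing import Callable

# A script receives the hub (to call other scripts) and its own persistent state.
Script = Callable[["Hub", dict], object]


class Hub:
    def __init__(self) -> None:
        self.scripts: dict[str, Script] = {}
        self.triggers: dict[str, list[str]] = defaultdict(list)
        self.state: dict[str, dict] = defaultdict(dict)

    def register(self, name: str, script: Script, triggers: tuple[str, ...] = ()) -> None:
        self.scripts[name] = script
        for event in triggers:
            self.triggers[event].append(name)

    def call(self, name: str) -> object:
        """Run one script by name, passing in its state from earlier runs."""
        return self.scripts[name](self, self.state[name])

    def fire(self, event: str) -> None:
        """Run every script subscribed to an event, e.g. a timer tick."""
        for name in list(self.triggers[event]):
            self.call(name)


def read_temperature(hub: Hub, state: dict) -> float:
    return 18.0  # stand-in for a real sensor read


def count_cold_minutes(hub: Hub, state: dict) -> int:
    # Calls another script for modularity and keeps a counter between runs.
    if hub.call("read_temperature") < 19.0:
        state["cold"] = state.get("cold", 0) + 1
    return state.get("cold", 0)


hub = Hub()
hub.register("read_temperature", read_temperature)
hub.register("count_cold_minutes", count_cold_minutes, triggers=("timer.1m",))
for _ in range(3):
    hub.fire("timer.1m")  # simulate three one-minute timer events
```

After the three simulated ticks the counter held in the script's state has been incremented on each run, without the script itself holding any global variable.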

Scripts are written in the Groovy language. Groovy is largely a superset of Java and is compiled dynamically to JVM bytecode, so it is very efficient.

Sensors are defined by a special script. Sensors themselves are just named values. They are only updated by scripts, which can poll periodically or be triggered by outside events. Sensors can be numeric, boolean or vectors of the above. Sensors can also be updated to be in an error state. Notification scripts can be triggered when errors occur.
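
A minimal sketch of this sensor model, again in Python rather than Groovy, might look like the following. It assumes the error state is attached to the sensor and that a notification fires only when a sensor first enters an error; `Sensor`, `SensorTable`, `on_error` and the sensor name are hypothetical names for illustration, not Polestar's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union

Scalar = Union[int, float, bool]
Value = Union[Scalar, list[Scalar]]  # numeric, boolean, or a vector of them


@dataclass
class Sensor:
    name: str
    value: Optional[Value] = None
    error: Optional[str] = None  # None means the sensor is healthy


class SensorTable:
    def __init__(self, on_error: Callable[[Sensor], None]) -> None:
        self.sensors: dict[str, Sensor] = {}
        self.on_error = on_error  # plays the role of a notification script

    def define(self, name: str) -> None:
        self.sensors[name] = Sensor(name)

    def update(self, name: str, value: Optional[Value] = None,
               error: Optional[str] = None) -> None:
        """Called by scripts only; sensors never update themselves."""
        sensor = self.sensors[name]
        newly_failed = error is not None and sensor.error is None
        if error is None:
            sensor.value = value  # keep the last good value while in error
        sensor.error = error
        if newly_failed:
            self.on_error(sensor)


alerts: list[str] = []
table = SensorTable(on_error=lambda s: alerts.append(f"{s.name}: {s.error}"))
table.define("boiler.flow")
table.update("boiler.flow", value=[61.5, 48.0])
table.update("boiler.flow", error="no reading for 10 minutes")
# Already in error, so this second report does not notify again.
table.update("boiler.flow", error="no reading for 10 minutes")
```

Keeping the last good value while the sensor is in error is a design choice of this sketch; a real hub might instead clear the value.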

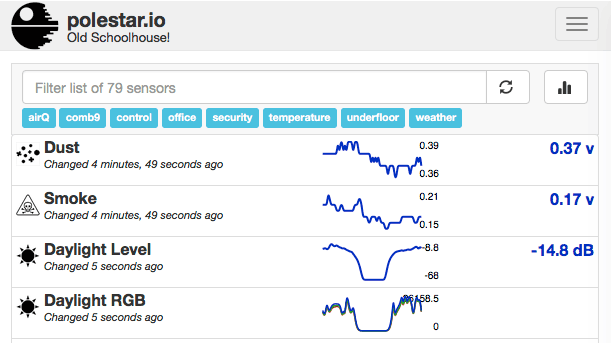

Real-time sensor values can be viewed on the sensors page. Historical values are shown as tickers with a configurable period (default 24h). Sensors can be filtered by tag or name.

Figure1: Sensor Page

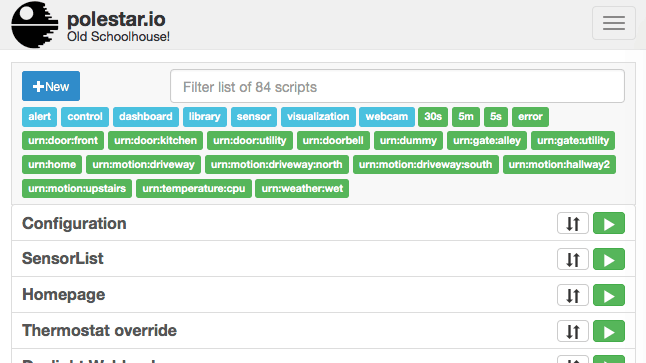

Scripts can be created and managed on the scripts page. You can filter by trigger actions, keywords or name. You can also reorder and execute scripts here.

Figure2: Scripts Page

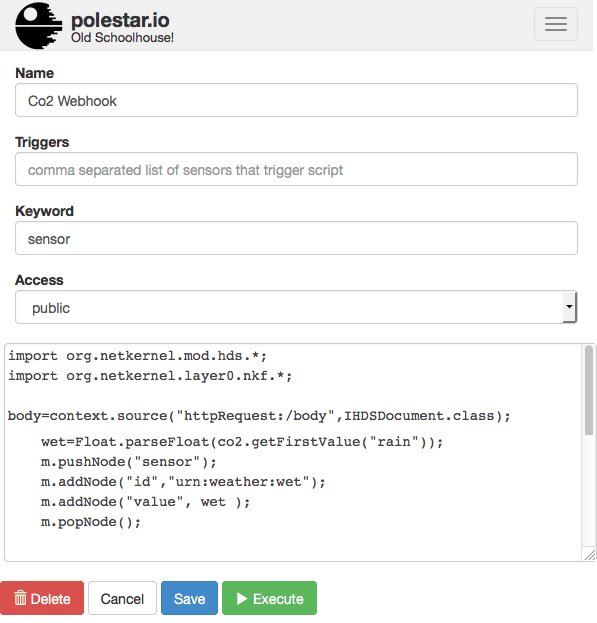

Scripts can be edited to specify triggers, set their access control and change their Groovy source code. Access control levels are:

- secret - only admin user can view, edit and execute script.

- private - admin user has full access but guest can only view.

- guest - guest and admin user can view and execute script.

- public - anybody, including unauthenticated external services can execute script. This is useful for webhooks.

Figure3: Script Edit Page
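
The four access levels can be read as a small permission table. The sketch below is one interpretation in Python, not Polestar's implementation: the role names and `is_allowed` are hypothetical, and it assumes that the admin keeps full access at every level and that unauthenticated callers can execute public scripts but not view their source.

```python
ADMIN, GUEST, ANONYMOUS = "admin", "guest", "anonymous"
FULL = {"view", "edit", "execute"}

# Allowed actions per access level and role (an interpretation of the list above).
PERMISSIONS: dict[str, dict[str, set[str]]] = {
    "secret": {ADMIN: FULL},
    "private": {ADMIN: FULL, GUEST: {"view"}},
    "guest": {ADMIN: FULL, GUEST: {"view", "execute"}},
    "public": {
        ADMIN: FULL,
        GUEST: {"view", "execute"},
        ANONYMOUS: {"execute"},  # e.g. an incoming webhook (assumed execute-only)
    },
}


def is_allowed(level: str, role: str, action: str) -> bool:
    """Return True if the role may perform the action on a script at this level."""
    return action in PERMISSIONS[level].get(role, set())
```

For example, `is_allowed("public", ANONYMOUS, "execute")` is true, which is what lets an external service call a webhook script, while the same call against a `guest` level script is refused.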

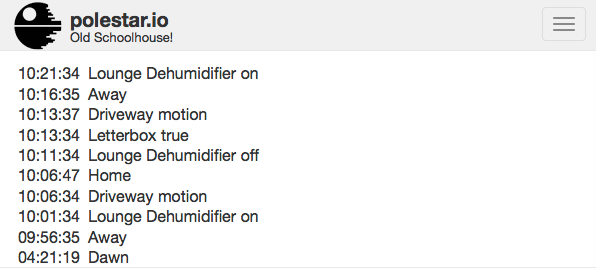

Polestar has an internal log which scripts can write to.

Figure4: Log Page

Polestar is a NetKernel module. I host it on an old laptop running Ubuntu but it will easily run on a Raspberry Pi. I expose it over HTTPS from my home router with dynamic DNS.

This simple set of functionality is all that is needed to provide a flexible and dynamic internet of things hub!

Further information

- Play with a live instance (on polestar.io), not my home monitor instance!

- Read detailed user guide.

- Download from GitHub.