“Cut to the chase” is something you’re only likely to hear me say if I’m enduring some kind of Dickensian wordfest drawn out from the changing of a single bit of information. In this case, however, I’m not the actor; rather, it’s a new architectural endpoint for NetKernel that can keep your web apps on track and up to date. Let me explain.

Whilst working on the recent representation cache update, I noticed that the standard pattern of issuing AJAX requests from the browser was causing a problem: if the requests are slow to process, they queue up and are all processed sequentially. This can mean quite a wait to clear the queue. In my situation, which I believe is not atypical, the last request is the only important one. For example, the user switches tabs and the datatable on that tab needs to refresh, or the user is typing into an on-the-fly filtering field of a datatable. I’m not saying that intermediate queued requests are never important, but rather that in this case they are not. So what I needed was a “cut-to-the-chase” throttle.

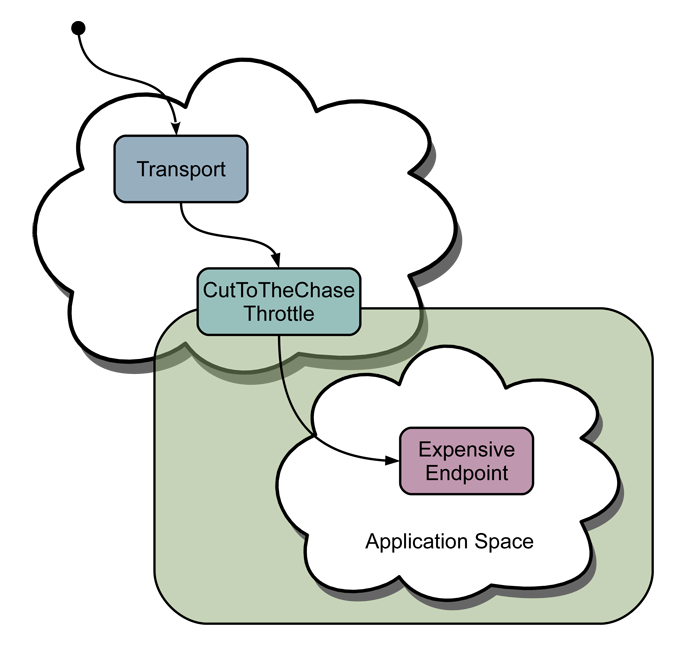

The CutToTheChaseThrottle is implemented as an overlay in the same way as the regular Concurrency Throttle. I call it a throttle because a throttle can queue and/or reject requests. In this case the throttle allows only one request to pass at a time, regardless of its resource identifier or target endpoint, and if an additional request is received it is queued. Once a request is queued, any further request that arrives takes its place in the queue and the previously queued request is rejected. The request is rejected by issuing a “Request Rejected” exception response. The currently executing request is unaffected. It might be considered nice to somehow cancel or kill the currently executing request and always start executing the latest received request. This, however, is not possible at the moment because there are no well-defined semantics for terminating requests early, except in the heavy-handed way that the deadlock detector does (and that completely terminates the whole sub-request tree).

Figure 1: Throttle in ROC diagram
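The queueing rule (one request executing, one waiting, and newer arrivals displacing the waiter) can be sketched outside NetKernel. The following is a minimal Python illustration and not the NetKernel implementation: the class and exception names, the `submit` method, the use of `Future` and the worker thread are all assumptions made for the example.

```python
import threading
from concurrent.futures import Future


class RequestRejected(Exception):
    """Delivered to a queued request that was displaced by a newer one."""


class CutToTheChaseThrottle:
    """Hypothetical sketch: one request in flight, at most one waiting."""

    def __init__(self, handler):
        self._handler = handler      # stands in for the wrapped endpoint
        self._lock = threading.Lock()
        self._busy = False           # True while a request is executing
        self._pending = None         # (request, future) waiting its turn

    def submit(self, request):
        """Return a Future holding the response, or raising RequestRejected."""
        future = Future()
        run_now = False
        displaced = None
        with self._lock:
            if not self._busy:
                self._busy = True
                run_now = True
            else:
                # Newest arrival takes the single queue slot.
                displaced, self._pending = self._pending, (request, future)
        if displaced is not None:
            displaced[1].set_exception(RequestRejected(repr(displaced[0])))
        if run_now:
            worker = threading.Thread(
                target=self._run, args=(request, future), daemon=True
            )
            worker.start()
        return future

    def _run(self, request, future):
        # Execute the current request, then whatever is waiting, until idle.
        while True:
            try:
                future.set_result(self._handler(request))
            except Exception as exc:
                future.set_exception(exc)
            with self._lock:
                if self._pending is None:
                    self._busy = False
                    return
                (request, future), self._pending = self._pending, None
```

Calling `submit` five times in quick succession against a slow handler leaves the first and last futures with results, while the three in between raise `RequestRejected`. As in the throttle described here, the executing request is never interrupted.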

Here is an example of operation. We have a scenario where 5 requests (numbered 0 to 4) are issued 100ms apart. The endpoint that processes the requests sits behind the throttle and takes 1000ms per request. We get a response back from the 5th request after 2000ms rather than the 5000ms it would take without the throttle. The test output has three columns: time in milliseconds, operation, and request number.

0000 REQUEST: 0

0000 IMMEDIATE: 0

0000 START: 0

0100 REQUEST: 1

0201 REQUEST: 2

0202 REJECT: 1

0302 REQUEST: 3

0303 REJECT: 2

0404 REQUEST: 4

0404 REJECT: 3

1001 COMPLETE: 0

1001 RELEASE: 4

1002 START: 4

2002 COMPLETE: 4

Operation legend:

REQUEST: request issued

IMMEDIATE: request is immediately issued by throttle

START: endpoint starts processing a request

REJECT: throttle rejects request

RELEASE: throttle releases the queued request to the endpoint

COMPLETE: endpoint completes processing of a request

One additional feature is the ability to handle multiple throttles keyed on the value of some resource contextual to the request being processed, for example the remote host address of a client. This is achieved by specifying an optional key parameter, which defines a resource identifier that will be sourced to obtain the key name of the throttle to use. Throttles are created and destroyed on demand, so there is no management complexity.
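One way to picture throttles that are created and destroyed on demand is a registry that builds a throttle the first time a key is seen and discards it once no request is using it. The sketch below is an assumption about how such bookkeeping could look, not a description of the NetKernel internals; `KeyedThrottles`, `use` and the factory callable are hypothetical names.

```python
import threading
from contextlib import contextmanager


class KeyedThrottles:
    """Hypothetical registry: one throttle per key, alive only while in use."""

    def __init__(self, factory):
        self._factory = factory      # called with the key to build a throttle
        self._lock = threading.Lock()
        self._entries = {}           # key -> [throttle, active_request_count]

    @contextmanager
    def use(self, key):
        with self._lock:
            entry = self._entries.get(key)
            if entry is None:
                # First request for this key: create its throttle on demand.
                entry = self._entries[key] = [self._factory(key), 0]
            entry[1] += 1
        try:
            yield entry[0]
        finally:
            with self._lock:
                entry[1] -= 1
                if entry[1] == 0:
                    # Last request for this key has finished: discard the throttle.
                    del self._entries[key]

    def __len__(self):
        with self._lock:
            return len(self._entries)
```

Here the key would be the value sourced from the key resource, such as a client's remote host address, so that each client's requests are throttled independently of everyone else's.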

Here is a sample module.xml:

We will be shipping this throttle as part of the Enterprise Edition mod:architecture module in the new year. This throttle consists of fewer than 50 lines of code, and many other kinds of throttle are possible, limited only by your imagination.