Dynamic programming is an approach to efficiently implementing certain classes of algorithms. As a feeble excuse for not noticing this earlier, the term is a little confusing: it isn’t about programming or about doing it dynamically! It was invented in the 1950s by Richard E. Bellman whilst working for RAND Corporation. Apparently he deliberately named it obtusely to avoid his work being considered research!

I discovered this term recently when looking into existing research about algorithm optimisation and specifically why the systemic memoisation in NetKernel can optimise solutions to certain problems particularly well.

It is a technique for structuring the implementation of an algorithm in order to find a solution that is more efficient than a naive brute-force approach. The approach involves recursively breaking a problem down hierarchically into overlapping sub-problems. Once these sub-problems are executed the results are stored in such a way that if the same sub-problem occurs again we can retrieve the solution rather than re-executing.

Not all problems in computer science are amenable to this approach but fortunately many common problems are. Typically the computational time complexity (Big O notation) can be improved. So for example from factorial time to exponential (for the travelling sales man problem) or from exponential to polynomial for subset-sum. Because the storage of results from sub-problems requires space often the space complexity for an algorithm increases.

Here is a quick summary of common problems that have more efficient solutions with a dynamic programming approach:

| Problem | Complexity | Summary |

|---|---|---|

| Travelling Salesman | O(n!) -> O(2n) | The shortest path between a set of points such that every point is visited and we return to the start. |

| Subset-sum | O(2n) -> pseudo-O(n) | Find the subset of a set of number which sum to a given number. |

| Compute Fibonacci | O(2n) -> pseudo-O(n) | Simply determine a single number from the fibonacci sequence. |

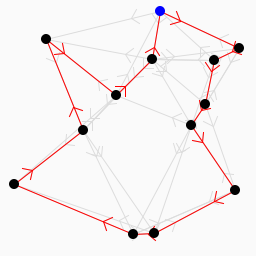

It is interesting to look at the reasons behind which problems can be optimised by dynamic programming and which can’t. If we first look at the divide and conquer approach to algorithms. This involves breaking down a problem into smaller sub-problems which can be solved in isolation and then combined to solve the larger problem. It also works in a bottom-up recursive way. The difference to dynamic programming is that these sub-problems do not overlap and hence there is no gain from the memoising and subsequent reuse of solutions to sub-problems. So the first important characteristic of dynamic programming is Overlapping subproblems.

Next we look at the greedy algorithm approach which on first appearance can make similar optimisations to dynamic programming. It works by recursing and calculating top-down. Seeing only the local optimisations at each step, it will only give a globally optimal solution if each sub-problem adds and diminishing contributions to the final solution. Dynamic programming on the other hand places a different constraint on sub-problems: larger sub-problems can be efficiently built from smaller sub-problems. This characteristic is known as Optimal substructure.

Stepping back to the Real World

The thing I find interesting and slightly curious about all the research in the area of computation complexity - and there is a lot - is that there has been very limited work in the area of optimising general computation - i.e. solving real world problems that can’t be so neatly expressed with a clean algorithm. Specifically by this I mean that the approaches we have talked about up until now are narrow algorithms. They solve specific problems that might form part of larger software systems. These problems have the benefit that they are more tractable. However in the context of a larger system they could be seen as just local non-optimal solutions.

Let me illustrate this with an example: Imagine we are selling doughnuts and our travelling salesman must visit many, say approximately 100, doughnut stands across London each morning. After midnight when we have received all our orders for the next day we could just run the standard travelling salesman algorithm and find the optimum path around the stands to visit each one with minimum driving.

Occasionally we onboard new stands and sometimes stands close permanently. In this case the problem remains largely unchanged in the sense that most of the vertices in our graph that forms the input to the TSP computation remain the same. What is exciting is that if we are solving the travelling salesman problem with dynamic programming then we would expect a large percentage of the sub-problems to remain identical. By looking at the larger context of the algorithm we can drastically effect the total computation time needed to solve the problem.

Now this raises a big question: How can we manage this stored state? This actually breaks down to two questions:

- How can we uniquely identify sub-problems in such a way that state can be stored and retrieved between multiple invocations?

- How can we manage the lifecycle of state? State comes into existence when a sub-problem is solved. But by what mechanism can we remove state so that we don’t have an unbounded space requirement? (Normally state is lost when the algorithm completes but clearly that would loose the benefits we’re discussing.)

Enter stage left NetKernel

The resource-oriented computing abstraction (ROC) embodied in NetKernel provides a framework for answering the questions posed above regarding managing sub-problem state.

ROC recasts sub-problems as resources. Sub-problems are not solved but instead we say resources are requested. Resources are identified by resource identifiers that codify all the inputs to a problem (also defined as resources) along with the algorithm or computation name to apply to the inputs. Resources, or more correctly classes of resources, are implemented by real software code called endpoints. Endpoints may request other resources - problems may recursively compute using solutions from sub-problems.

By requesting resources that have well defined identity, ROC provides a natural place to inject caching to store and retrieve results. This allows a cached result to be transparently used in place of actually executing a sub-request by an endpoint. The result: overlapping sub-problems are not re-executed.

As we mentioned above, managing lifecycle is the second part of the state management issue. NetKernel provides a bounded cache to store the responses from resource requests. A value function is defined to determine which responses are removed to maintain the size. This value function uses metrics including cost to compute and re-request count. The cache ensures that we make the best use of the available space to store sub-problems. The solutions to the sub-problems that are actually stored will be the ones that are most useful.

There are some other interesting characteristics of the NetKernel cache such as it’s dependency model for invalidation of responses. Additionally it’s worth noting it’s approach to identifying the bounding scope of a resource that requires sub-requests resolved within. If you are interested you can find more details in my previous post Caching: How and why it works.

Modelling problems in ROC

It turns out that the resource oriented way of modelling systems inherently leads to a recursive functional decomposition. We encourage thinking about the rates of change of information and using this as natural boundaries for resources. This leads to the right kind of sub-problems to enable maximal or close to maximal sub-problem overlap.

The example I like to use is the one of a simple news website. The homepage is composed of a number of sub components. It might have a summary of top headlines with snippets, a list of categories, a list of most read stories, a weather report and a bar with login information and personal account status. Each of these components changes at it’s own rate driven by new publications, periodic back-office actions or for different users. Each of these components themselves may also be further composed from multiple sub-parts.

Whilst serving this page to visitors over time we will find that most of the components remain unchanged for many requests. For example we may only need to update the weather report each hour. The sub-problem of the weather service widget can be retrieved from cache without further re-computation for all visitors. When the weather component is out-dated only then do we need to incur the cost of requesting the third party weather feed and formatting it into our component.

Summary

Implementing software systems in a resource-oriented way leads to very compute efficient solutions. This is explained through the understanding that the resource-oriented abstraction combined with the NetKernel cache behaves in a manner directly analogous to the dynamic programming approach.